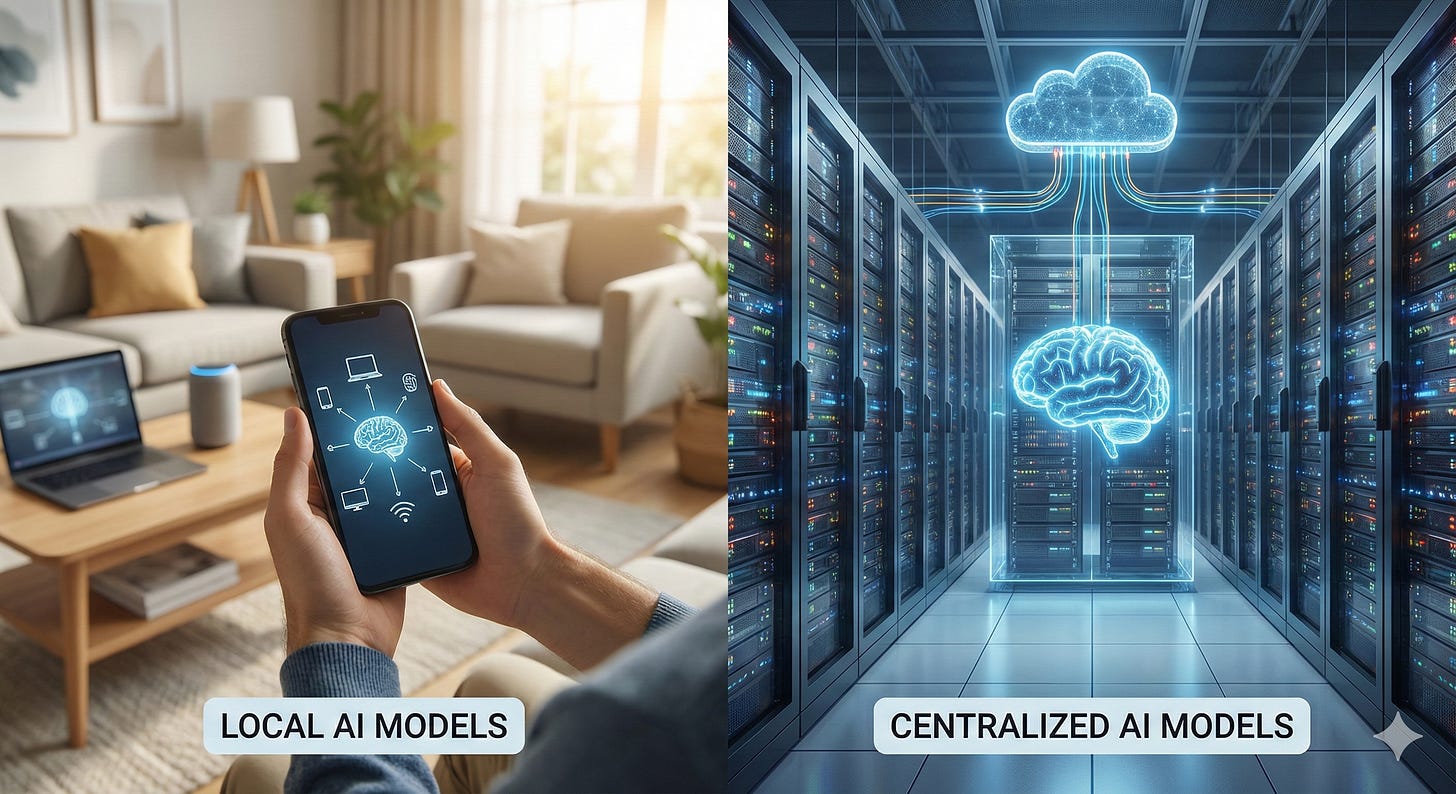

Local AI Models vs. Centralized AI Models

Chips that run AI models locally on the phone will reduce the need for chips that run them in the datacenter
